Become a certified professional

Find out how to start and advance your career in open source.

Hire only certified professionals

Find out why hiring only certified professionals is good for your business.

"See these resources not only as instruments for professional development, but as key knowledge in a world increasingly permeated by technology."

- Luciano Siqueira, Brazil

Author and Teacher in the field of IT Training and part of the LPI’s Learning Portal Team

"Thanks to the localization process I got to learn a lot more about command line tools and I’ve even incorporated some of this new knowledge to my other jobs."

- Julia Vidile, France

Ghostwriter and Copywriter, Learning Materials Author and Translator

"Your learning is the best investment that you can ever make in your career."

- Andrew Mallett, United Kingdom

Founder of The Urban Penguin, Pluralsight Author, Learning Tree International Freelancer and part of the LPI’s Learning Portal Team

How to advance your open source career

Linux Professional Institute (LPI) is the global certification standard and career support organization for open source professionals. With more than 200,000 certification holders, it’s the world’s first and largest vendor-neutral Linux and open source certification body. LPI has certified professionals in over 180 countries, delivers exams in multiple languages, and has hundreds of training partners.

IT certifications and what they can do for you.

Find out which certification is right for you.

Check out our study and exam resources.

Get involved. Join the LPI community.

Events

Linux Professional Institute (LPI) supports Open Source Day Warsaw ’24

April 18, 2024: The 13th edition of Open Source Day is scheduled for April 18th in Warsaw – registration is now open! Open Source Day in Warsaw, the largest conference in Poland and Central-Eastern Europe dedicated to open technologies, features ... Read more

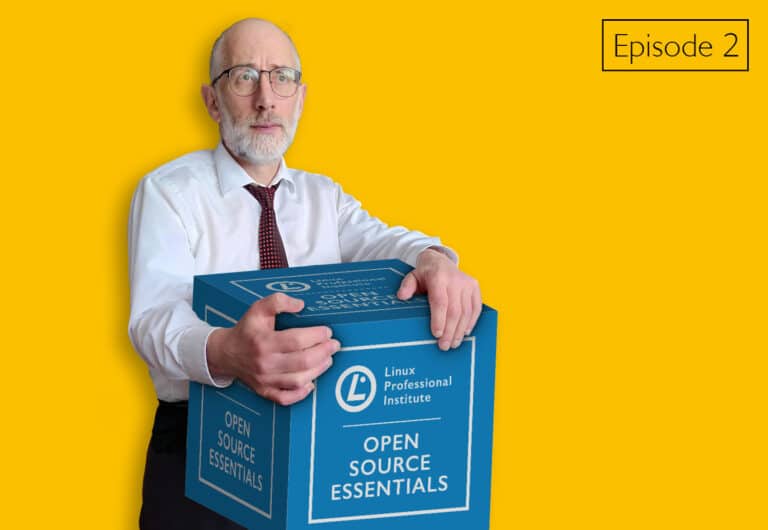

News

Linux Professional Institute Launches the Open Source Essentials Education Program

Toronto, 04-04-2024 – Linux Professional Institute (LPI) announces the launch of Open Source Essentials, a certificate and education program targeting the common set of knowledge everyone with a role in open source should have. “Open source is nowadays used in ... Read more

Blog

Roles in Open Source: Bringing Order to the Chaos

By nature, software developers – and especially open source software developers – tend to value their independence. And like all of us, they each have opinions about how things should be done. So dealing with disagreements can be all part ... Read more

Regional Support and Offices

LPI works with organizations around the world to ensure the growth and adoption of Linux, open source and Free Software.

Learn more

Preparing for Your Exam

Here you can find everything you may need to prepare for your LPI certification.

Learn more

Success Stories

Read the success stories of our certification holders and partners and learn what they have gained from Linux Professional Institute (LPI) certifications.

Learn more

Get news, event invites, and updates.

Get the latest news, tips, certification updates, event information and special community offers and surveys, delivered straight to your inbox from the Linux Professional Institute.

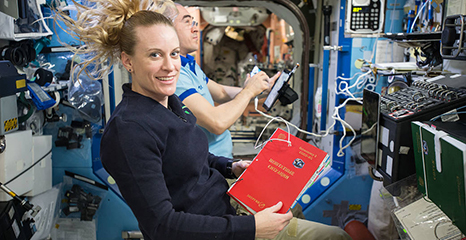

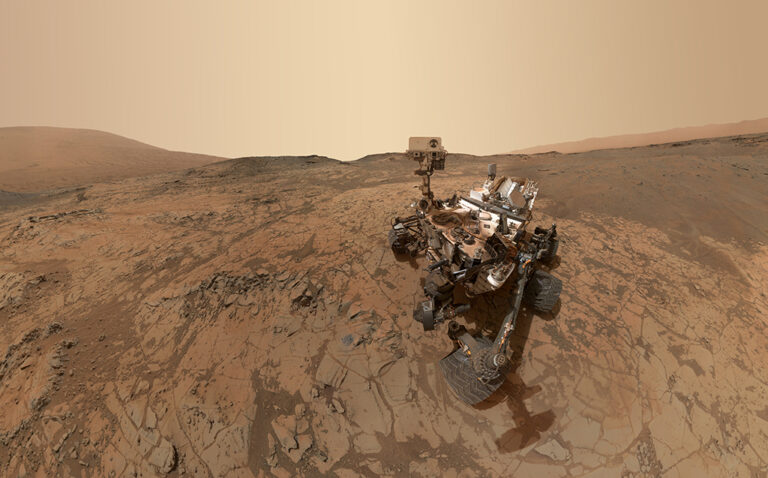

The most interesting projects in the world run on Linux.

Join the community of Linux professionals who are making incredible things happen with Linux and open source around the world.

Thanks to our Mission Supporters for all their support.

Would you like to become a sponsor? Contact us