TLS, Post-Quantum, and Everything In Between at LFNW25

Some talks start as slides. Others start as hallway conversations, scan results, certificate errors, and someone in the team asking: “Hey Ted, do we really need TLS 1.3?” By the time I finished preparing this talk for LinuxFest Northwest 2025 — in what was also the 25th anniversary of both LFNW and LPI — it was clear we needed a post on security in the quantum computing age that would reach beyond the room.

My quest isn’t just about standards. It’s about making sure that the web we build — especially in FOSS — is one step ahead of what’s coming. Even when what’s coming is… quantum.

While at LFNW (see full report here), I was happy and proud to give the talk “Securing Your Web Server with Post-Quantum Cryptography.” This blog post is a rethinking of that session, adapted to this medium, and maybe a bit easier to search and share.

Let’s dig in.

SSL, TLS, and That Whole Mess

Yes, we still call them SSL certs. But TLS superseded SSL back in 1999, and the last SSL version, SSL 3.0, was formally deprecated in 2015.

Today, if your setup isn’t using TLS 1.2 at a minimum (or, preferably, TLS 1.3), you’re already in trouble.

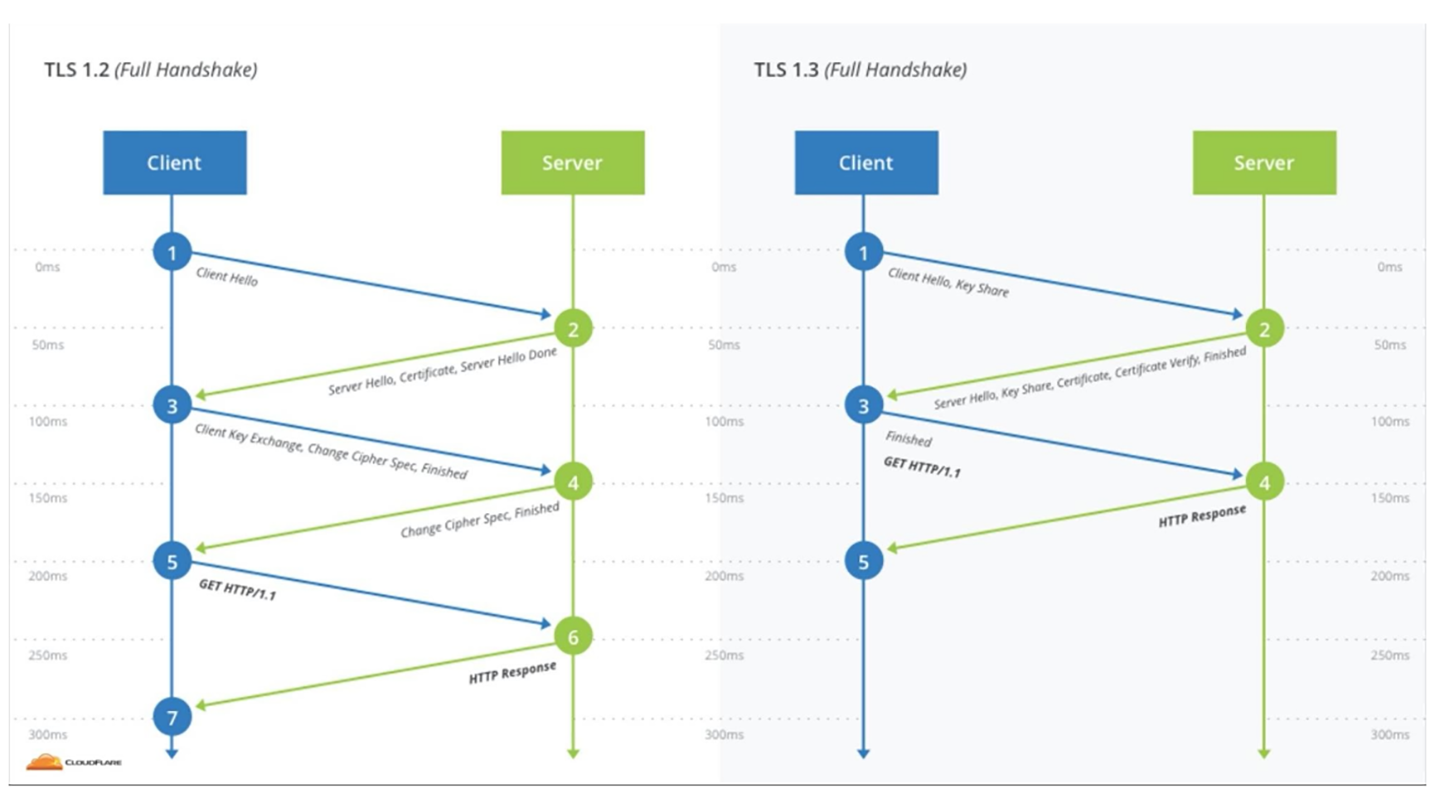

TLS 1.3 isn’t just cleaner: it removes old baggage (like static RSA key exchange, which lacks the forward secrecy that ephemeral Diffie-Hellman provides), enforces better cipher suites, and lays the groundwork for post-quantum support. TLS 1.3 also introduces a more efficient handshake between clients and servers, as shown in Figure 1 (courtesy of Cloudflare):

Figure 1. Difference between the opening handshake in TLS 1.2 and TLS 1.3.

Post-quantum is where we’re going. Fast. Every time I read about a new quantum attack on RSA, the numbers under attack are larger. And the actual situation may be even worse than the reports suggest, because the published studies don’t cover the work of state actors or others who prefer not to disclose their achievements.

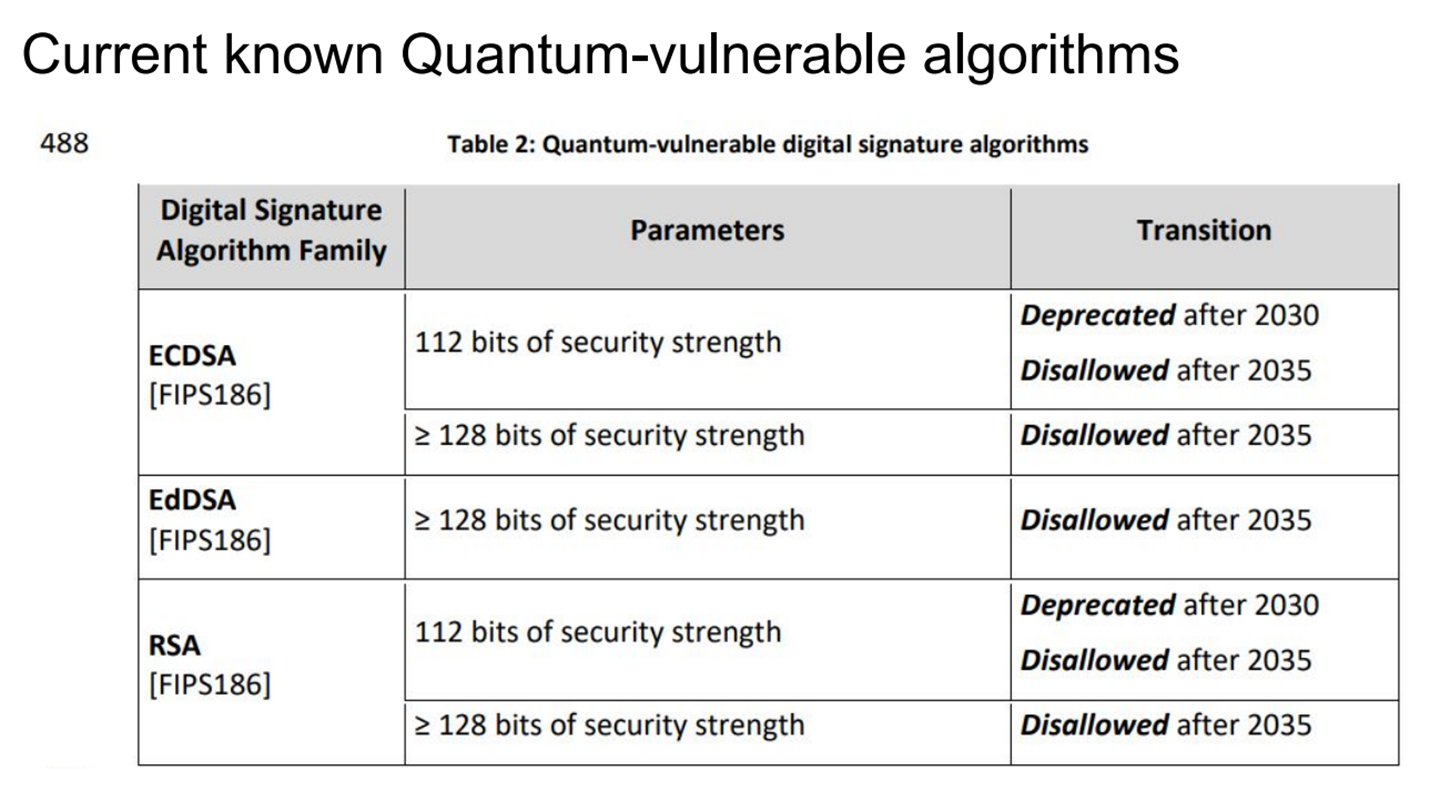

I certainly don’t know when our widely used 2048-bit RSA will be broken. But many feel that NIST’s recommendation to deprecate RSA and ECDSA by 2030 and disallow them after 2035 is reasonable, given the release of open source tools such as OpenSSL 3.5 that allow sites to migrate away from many of today’s quantum-vulnerable algorithms.

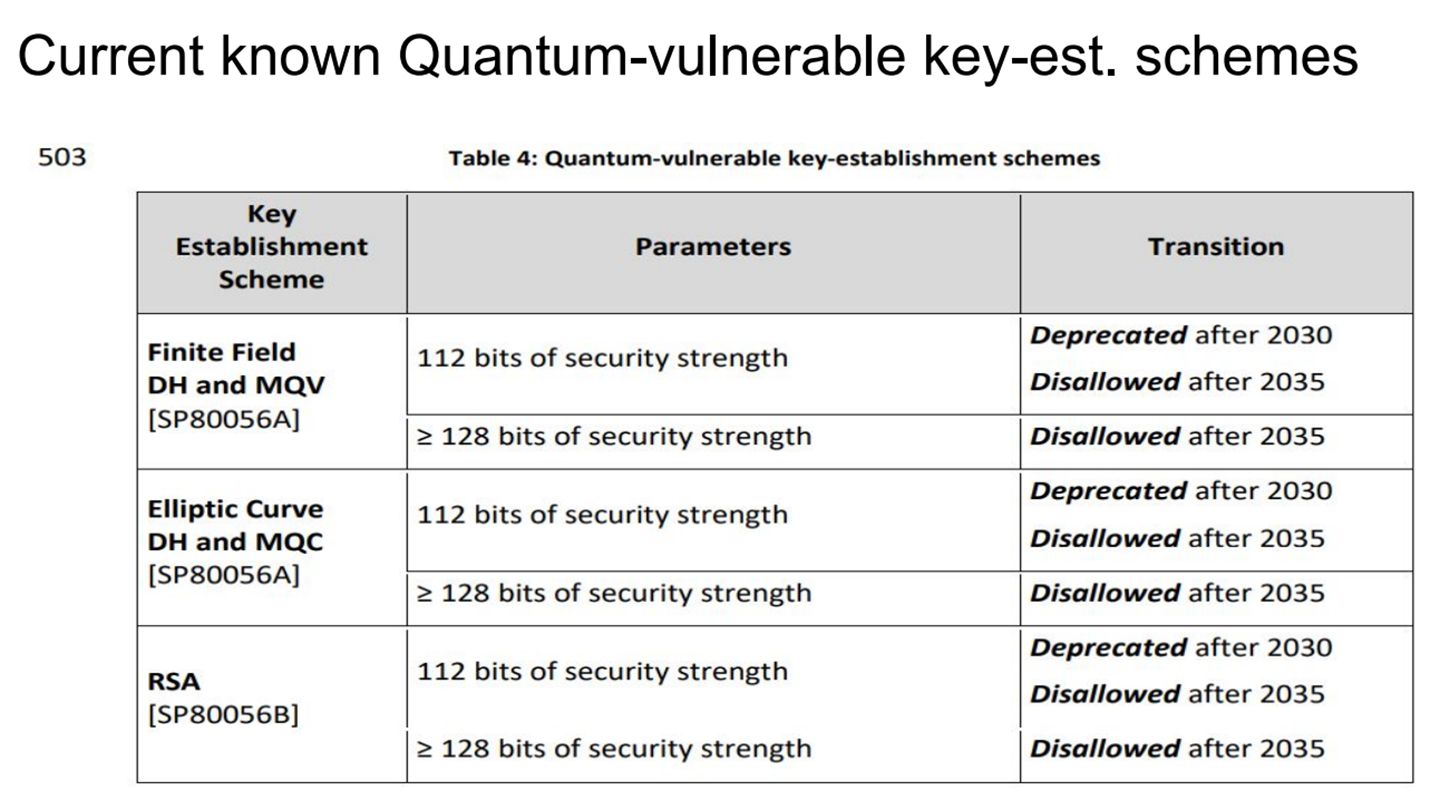

These tables show the timelines for migrating away from vulnerable standards:

Current known Quantum-vulnerable algorithms

Current known Quantum-vulnerable key-est. schemes

NIST has been working with cryptographic researchers around the globe for almost 10 years to finalize drafts for new quantum-resistant ciphers, and in many cases backups, in case unnoticed vulnerabilities turn up in the initial choices.

What We Mean by “Post-Quantum”

Post-quantum cryptography (PQC) isn’t a buzzword. It’s a practical response to a looming issue: what happens to our encrypted traffic when quantum computers hit scale?

You don’t need to guess. Attackers can harvest encrypted traffic now and decrypt it later, once quantum computers are capable enough.

Which is why I focused the second half of my session on the Module-Lattice-Based Key-Encapsulation Mechanism (ML-KEM), the Module-Lattice-Based Digital Signature Algorithm (ML-DSA), and HQC: algorithms vetted by NIST, with ML-KEM already deployed in hybrid mode by Cloudflare, Google, and other cloud vendors.

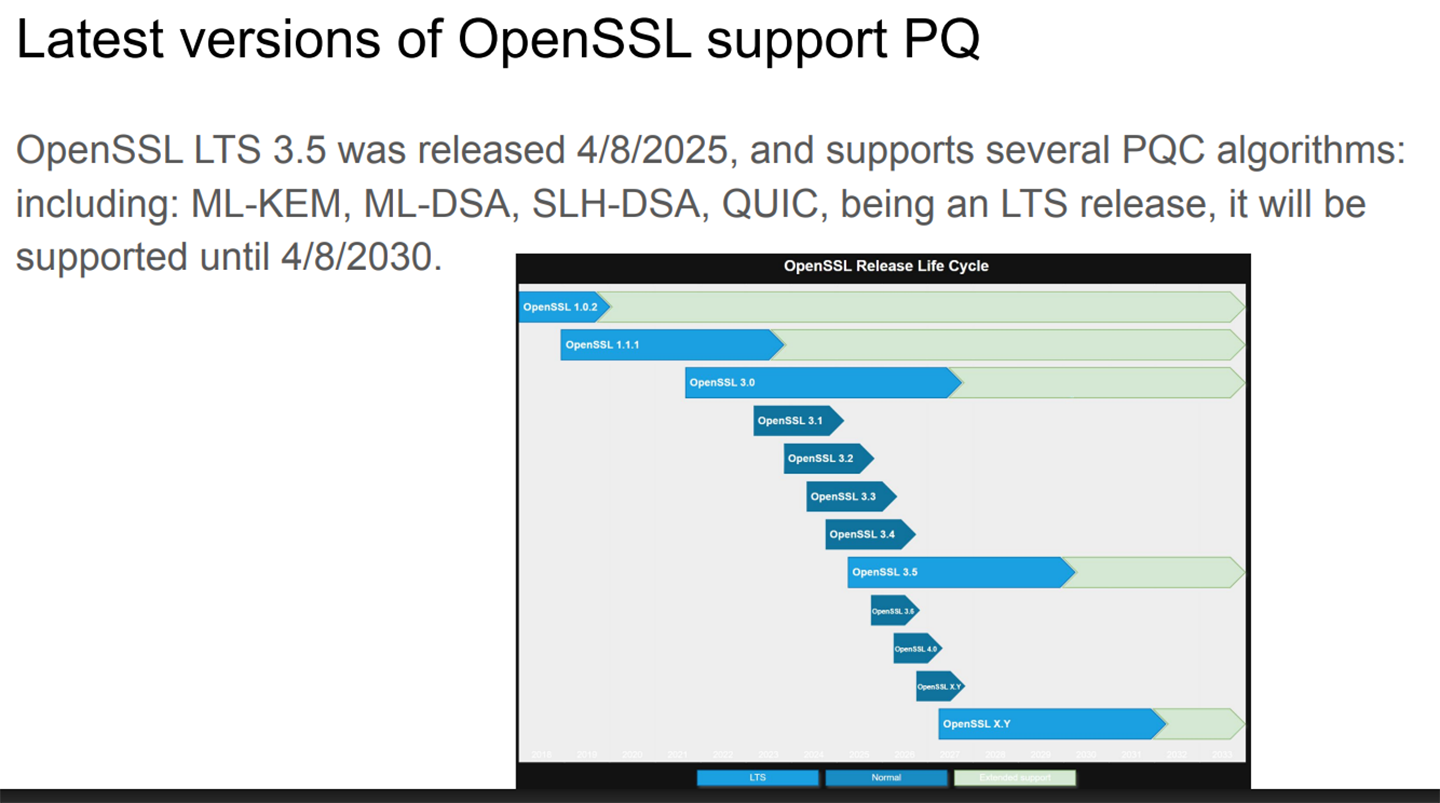

If your current server setups support OpenSSL 3.5, you’re already in a good place. If not, you have work to do, and should begin planning and testing now.
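As a quick first check, the sketch below compares an OpenSSL version tuple against a 3.5 baseline. It is only a rough indicator: `ssl.OPENSSL_VERSION_INFO` reports the OpenSSL library that Python itself is linked against, which may differ from the library your web server uses. The names `PQC_BASELINE` and `meets_pqc_baseline` are made up for this example.

```python
import ssl

# Assumption: 3.5 is the baseline for post-quantum support, as described
# in this post. PQC_BASELINE is a hypothetical name for this sketch.
PQC_BASELINE = (3, 5)


def meets_pqc_baseline(version_info=ssl.OPENSSL_VERSION_INFO):
    """Return True if a (major, minor, ...) version tuple is at least 3.5."""
    return tuple(version_info[:2]) >= PQC_BASELINE


if __name__ == "__main__":
    # This is the OpenSSL that Python links against, not necessarily
    # the one used by nginx, Apache, or other services on the host.
    print(ssl.OPENSSL_VERSION)
    print("Meets 3.5 baseline:", meets_pqc_baseline())
```

On a server, running `openssl version` against the binary your web server actually uses remains the more direct check.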

Figure 2 shows the trajectory for OpenSSL.

Figure 2. OpenSSL LTS versions (longer bars, light blue) will be supported for 5 years.

If your work doesn’t involve configuring or securing web servers or API endpoints using TLS, you may not need to implement OpenSSL 3.5 to benefit from post-quantum security. The latest versions of Firefox, Chrome, and likely other widely used browsers have built-in support for the ML-KEM PQC algorithm, and use it when connecting to a server or TLS endpoint that also supports ML-KEM.

Several browsers already use PQC in a hybrid fashion: the key exchange combines a classical algorithm with a PQC algorithm when both server and browser support it, while the certificate is still validated with signature algorithms designed before the quantum threat. Cloudflare estimates that currently 2% of the traffic on their network is protected by PQC. Major computing centers such as Google have been using PQC on their internal networks since 2022.
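The idea behind a hybrid key exchange can be sketched in a few lines: both sides obtain one shared secret from a classical exchange and one from a PQC exchange, then derive the session key material from both, so an attacker would need to break both algorithms. The sketch below is a simplification, not the TLS 1.3 key schedule. Random bytes stand in for the real X25519 and ML-KEM outputs, the concatenation order is illustrative, and `combine_hybrid` is a hypothetical helper name.

```python
import hashlib
import hmac
import os


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract step (RFC 5869) with SHA-256: HMAC(salt, ikm)."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def combine_hybrid(classical_secret: bytes, pq_secret: bytes) -> bytes:
    """Derive one 32-byte key from two fixed-length shared secrets.

    Hypothetical helper: the secrets are concatenated and passed through
    a single extract step. The order used here is illustrative only.
    """
    return hkdf_extract(b"\x00" * 32, pq_secret + classical_secret)


if __name__ == "__main__":
    # Placeholders: random bytes instead of real key-exchange outputs.
    classical = os.urandom(32)
    post_quantum = os.urandom(32)
    print(combine_hybrid(classical, post_quantum).hex())
```

Because the derived key depends on both inputs, recovering only the classical secret (for example, with a future quantum computer) is not enough to reconstruct it.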

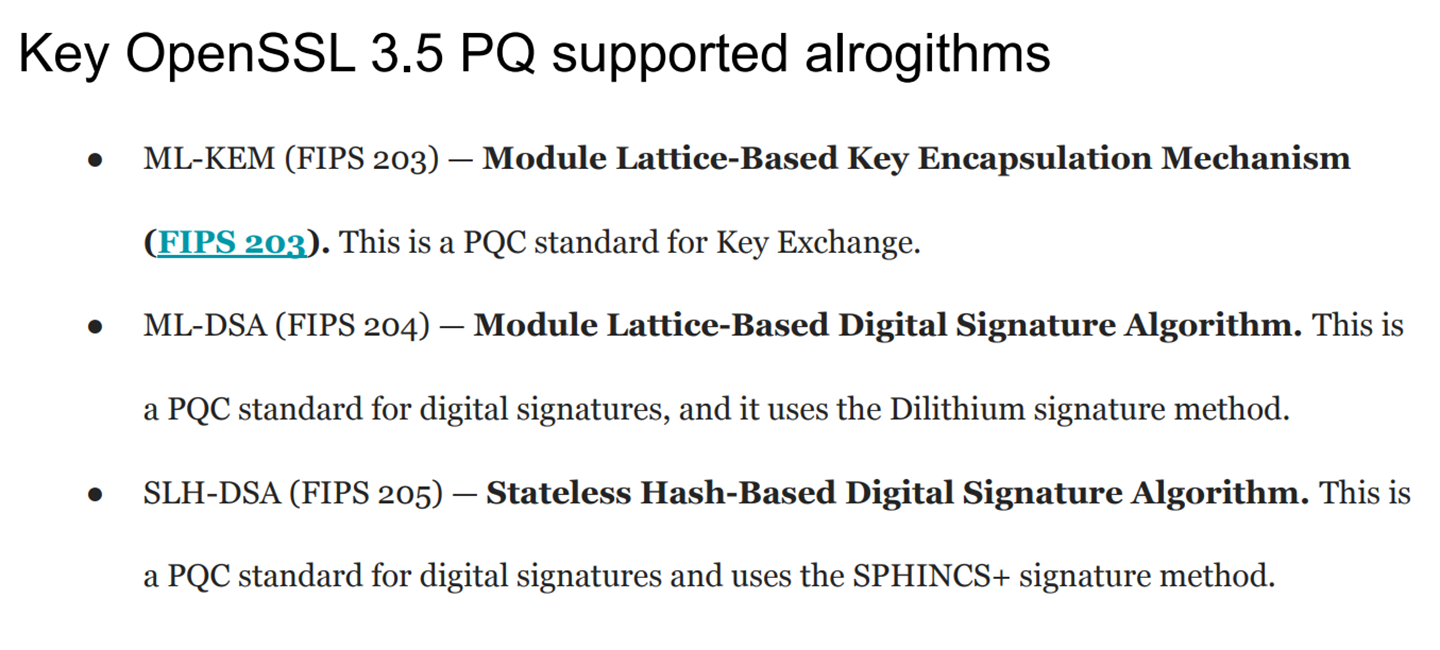

The new post-quantum algorithms have been assigned FIPS numbers for tracking in compliance audits where data requires the use of these algorithms. These numbers are listed in Table 3.

Key OpenSSL 3.5 PQ supported algorithms

IANA and the OpenSSL organization are naming the new PQ algorithms along the lines of the conventions used for cipher suites in the past.

Certificates: DV, OV, EV — and Expiry Math

Trust and privacy on the internet rely not just on technical methods but also on social trust. Certificates used for websites, TLS endpoints, and code signing depend on both, and Certificate Authorities (CAs) offer three levels of certificate validation.

A lot of us use Let’s Encrypt, and that’s perfectly fine for most sites. But it’s worth knowing that the Domain Validation (DV) certificates offered by Let’s Encrypt are basic — good just for personal projects and internal tools. Organizational Validation (OV) and Extended Validation (EV) certificates are recommended for banks, public services, and anywhere with identity needs beyond DNS.

Also: even a 10-year cert purchase needs to be reissued every 13 months. That’s the way the ecosystem handles expiry and trust. OV and EV certificates, whether used for websites or code signing, also go through more stringent auditing than DV certificates.
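The expiry math itself is easy to automate. The sketch below computes how many whole days remain before a certificate’s `notAfter` date, using the timestamp format that Python’s `ssl` module reports (for example, in the dictionary returned by `SSLSocket.getpeercert()`). The function name `days_until_expiry` and the sample dates are made up for illustration.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> int:
    """Whole days between `now` and a certificate's notAfter timestamp.

    `not_after` uses the format reported by Python's ssl module,
    e.g. "Jun  1 00:00:00 2025 GMT". A negative result means the
    certificate has already expired.
    """
    expiry_seconds = ssl.cert_time_to_seconds(not_after)
    expiry = datetime.fromtimestamp(expiry_seconds, tz=timezone.utc)
    return (expiry - now).days


if __name__ == "__main__":
    # Sample values only; in practice, pass datetime.now(timezone.utc)
    # and the notAfter field of the certificate you are checking.
    now = datetime(2025, 5, 2, tzinfo=timezone.utc)
    print(days_until_expiry("Jun  1 00:00:00 2025 GMT", now))  # 30
```

A check like this, run from cron or a monitoring job, gives you a warning window well before the reissue deadline.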

If you don’t know what your certs are doing, I highly recommend the web-based SSL Labs test, the command-line SSLScan, and similar tools.

Compliance: PCI, HIPAA, FIPS — and the Real Costs

If you’re working in healthcare, fintech, or gov contracts, compliance isn’t optional.

I broke down in the talk how FIPS 140-2 / 140-3, FedRAMP, and other standard frameworks define cryptographic validity — and how much it can cost to certify a distro or product.

Here’s the short version: millions. Over time, but still.

That’s why most people opt for validated modules from Red Hat, Rocky, SUSE, Alma, Ubuntu, etc. You still get enterprise support, and someone else takes care of the certification treadmill (and extensive fees).

Backward Compatibility (and Grandma’s Browser)

Why not just move to TLS 1.3 and forget 1.2?

Because real users don’t all run the latest OS. Maybe it’s an Android 5 phone. Maybe it’s a kiosk on Windows 7. Maybe it’s your grandma’s iMac that still works.

Supporting both TLS 1.2 and 1.3, with smart cipher selection, keeps things secure and usable.
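As one possible illustration of that policy, here is a minimal sketch using Python’s standard `ssl` module: it accepts TLS 1.2 and newer, and restricts TLS 1.2 to forward-secret AEAD cipher suites. The cipher string is an assumption about what “smart cipher selection” could look like, not a recommendation from the talk, and `build_server_context` is a hypothetical name. The same intent would be expressed differently in nginx or Apache configuration.

```python
import ssl


def build_server_context() -> ssl.SSLContext:
    """Server-side context that allows TLS 1.2 and 1.3 only."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)

    # Refuse anything older than TLS 1.2; TLS 1.3 is used when the
    # client supports it.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2

    # Assumed policy: for TLS 1.2, allow only ECDHE key exchange with
    # AEAD ciphers. set_ciphers() does not change the TLS 1.3 suites.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")

    # A real server would also load its certificate and key, e.g.
    # ctx.load_cert_chain("fullchain.pem", "privkey.pem")  # example paths
    return ctx


if __name__ == "__main__":
    context = build_server_context()
    for cipher in context.get_ciphers():
        print(cipher["protocol"], cipher["name"])
```

Printing the enabled suites, as the usage example does, is a handy way to confirm that nothing without forward secrecy slipped into the TLS 1.2 list.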

Elliptic Curve cryptography (ECC) helps here too — it’s lighter on CPU and faster on mobile devices than the classic RSA algorithm, especially when extending RSA to 4096 bits. Over half of the traffic on the internet is from mobile devices, so algorithms’ CPU loads are of great concern for both global energy usage and the battery life of portable devices.

Ready to Upgrade?

I shared this checklist live. It’s a good summary:

- Upgrade to OpenSSL 3.5 if possible, on servers that you manage.

- Use TLS 1.3, falling back to 1.2 when necessary.

- Know your cert type (DV/OV/EV).

- Scan your site using a command-line tool such as SSLScan or a web-based tool.

- Don’t leave port 80 open (for unencrypted web traffic) unless you really know why.

- Watch NIST PQC standards (ML-KEM, HQC, etc.).

- Expect hybrid deployment to be the norm during 2025–2030.

Beyond the Talk

I’ve been working in security, DevSecOps, and networking since the ’90s. And I’ll be honest: post-quantum crypto is the first thing in quite a while that feels like it’s rewriting the rulebook.

We’re lucky in FOSS: we can test, share, and adapt ahead of the curve. But we’ve got to show up. And we’ve got to prepare — not just patch.

Thanks go to LinuxFest Northwest, to everyone who came to the session, and to LPI for the space and support. Let’s build a future-proof web.

Slides and tools are available at https://slatey.org/LFNW_2025_Securing_Your_Web_Server.pdf, and the LFNW 2025 YouTube video is here: https://www.youtube.com/watch?v=N4v4eM_tK4E.

LPI proudly supported LFNW25. Read Ted’s full report HERE. LPI is already working with the LFNW team to support Linux and Open Source in the area towards the 2026 edition.

Want to talk or add your experiences here? Do you want to know more about how you can help the LFNW community? Leave a comment or email me at tmatsumura@lpi.org.