Xen Virtualization and Cloud Computing #04: Containers, OpenStack, and Other Related Platforms

Previous articles in this series introduced virtualization, discussed how Xen’s architecture provides it, and covered interesting Xen features. This article looks at some other platforms with which Xen interacts: containers and OpenStack.

Virtualization, containers—or both?

Containers are a mechanism for isolating processes, similar to virtualization. With the rise of Docker and Kubernetes, containers have become popular over the past decade, and some people consider them competition for virtualization. So I’ll briefly discuss the relationship between the two.

Container technology was born in 1979 with Version 7 Unix and its chroot feature. The chroot (“change root directory”) call, also available as a command, isolates a process by changing its root directory and so restricts its access to a specific directory tree. For instance, if you change a process’s root to /tmp/local23 and the process then tries to run /usr/bin/python3, it will actually run /tmp/local23/usr/bin/python3.
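The path remapping in that example can be sketched as a pure function. This is a hypothetical illustration of the effect of chroot, not of how the kernel implements it: the name `resolve_in_chroot` is invented here, and the sketch ignores symbolic links and the current working directory. (The real call is exposed in Python as `os.chroot`, which requires root privileges.)

```python
import posixpath


def resolve_in_chroot(new_root: str, requested: str) -> str:
    """Map a path as seen by a chrooted process to the real host path.

    Hypothetical sketch: ignores symlinks and treats every path as
    relative to the new root.
    """
    # Normalize against the process's "/" first. For absolute paths,
    # normpath drops leading ".." components, mirroring how ".." at a
    # process's root refers back to that root.
    inside = posixpath.normpath(posixpath.join("/", requested))
    # Graft the normalized path onto the real directory used as root.
    return posixpath.join(new_root, inside.lstrip("/"))


if __name__ == "__main__":
    # prints /tmp/local23/usr/bin/python3
    print(resolve_in_chroot("/tmp/local23", "/usr/bin/python3"))
```

Note that a request such as `/../../etc/passwd` still lands inside `/tmp/local23`, which is exactly the restriction chroot is meant to impose.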

This technology was hard to use and didn’t seem to have much application, so it was mostly forgotten. But in 2000, the idea became more popular with the introduction of FreeBSD Jails, which partition a FreeBSD system into several independent mini-systems that share the same kernel. Sun Microsystems entered the container era in 2004 with Solaris Containers (also called Solaris Zones), which combined system resource controls with boundary separation.

In 2001, the jail concept came to Linux with Linux VServer, and in 2005, OpenVZ offered operating-system-level virtualization for Linux through a patched kernel. In 2006, two Google engineers, Paul Menage and Rohit Seth, started work on a mechanism called “process containers” for accounting, limiting, and isolating resources such as CPU and memory for groups of processes. Renamed control groups (cgroups), it was merged into the Linux kernel in 2008. Also in 2008, LXC (Linux Containers) was introduced as virtualization at the operating system level. The reliability and stability of LXC persuaded developers to build other container technologies on it. The first of these was Warden in 2011, followed by Docker in 2013.

Docker was a revolution in container technology. It is easy to use and is driven mainly by a simple command-line tool. After Docker, another technology named rkt (pronounced “rocket”) tried to fill some of the gaps in Docker.

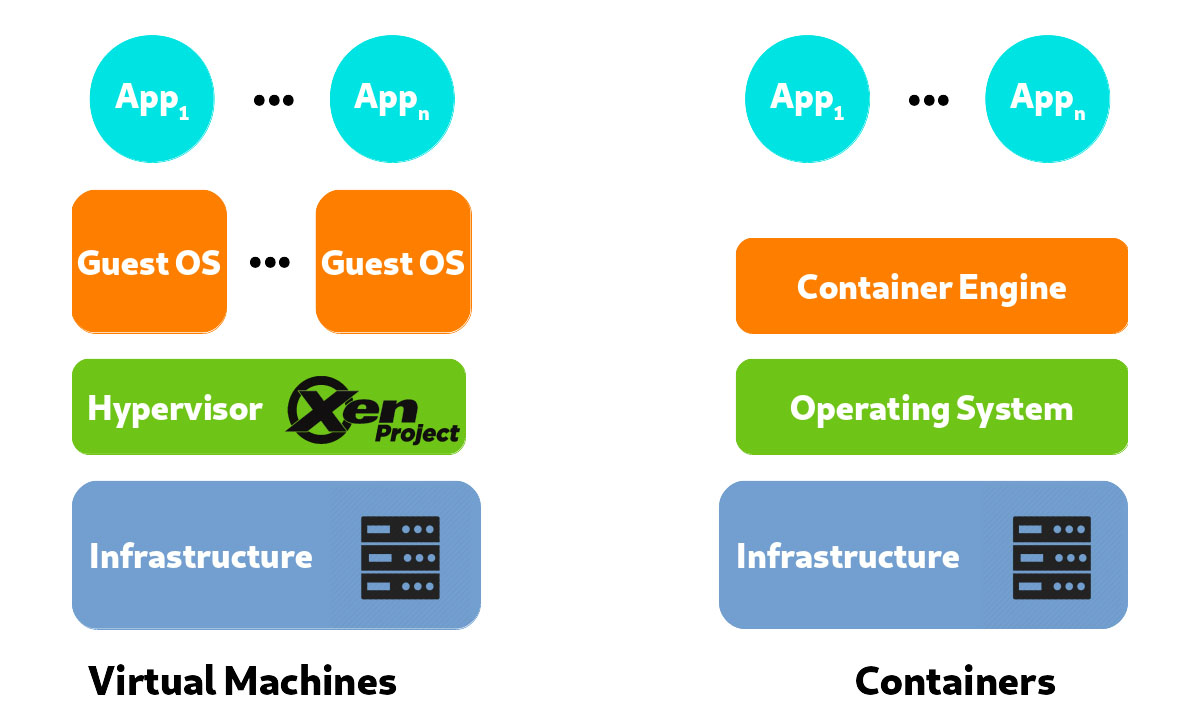

Basically, container technology is a method of packaging an application so it can run with isolated dependencies. Whereas virtual machines run their own kernels and look like complete computer systems, containers run individual processes and share the host OS kernel with the help of a container engine. Containers are lightweight, and deploying them is fast and easy. They are highly suitable for short-term applications and for cases where the user’s biggest priority is to run the maximum number of applications on a minimal number of servers.

Figure 5 compares containers and virtualization.
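Because a container’s processes are ordinary host processes, the kernel records which control group (cgroup, described earlier) each one belongs to, and container engines use these groups to apply per-container CPU and memory limits. As a minimal sketch assuming a Linux host, the code below reads `/proc/self/cgroup`, whose lines have the form `hierarchy-ID:controller-list:path`; on a cgroup v2 system there is typically a single `0::/...` line. The names `CgroupEntry` and `read_cgroups` are hypothetical, and the function returns an empty list where the file does not exist.

```python
from pathlib import Path
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]  # empty for the unified cgroup v2 hierarchy
    path: str               # the group's path within that hierarchy


def parse_cgroup_line(line: str) -> CgroupEntry:
    # Split into at most three fields, since the path itself may contain ":".
    hierarchy, controllers, path = line.split(":", 2)
    return CgroupEntry(int(hierarchy), [c for c in controllers.split(",") if c], path)


def read_cgroups(pid: str = "self") -> List[CgroupEntry]:
    proc_file = Path("/proc") / pid / "cgroup"
    if not proc_file.exists():
        return []  # not Linux, or /proc is unavailable
    lines = proc_file.read_text().splitlines()
    return [parse_cgroup_line(line) for line in lines if line.strip()]


if __name__ == "__main__":
    for entry in read_cgroups():
        print(entry.hierarchy_id, entry.controllers or "(v2 unified)", entry.path)
```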

Despite their benefits, containers also have disadvantages. Here are some:

- All containers on a host must be built to run on the same OS. If you want a container with a different OS, you need a different host machine. With some tricks, though, it’s possible to support applications from a different OS. For example, BrandZ (short for “branded zone”) in Oracle Solaris added support for the Linux system call interface to the Solaris kernel so that it can run native Linux applications. Such workarounds have limitations.

- As mentioned earlier, the OS kernel is shared, so any security hole in the kernel affects all containers on the host.

- If the application needs the full functionality of a dedicated operating system, it can’t run in a container.

- Containers aren’t suitable for applications that need to be used for an extended period of time.
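The first two drawbacks both follow from the shared kernel, which is easy to observe. As a minimal sketch assuming a Linux host with a typical container engine such as Docker, the snippet below reports the running kernel. Run it on the host and inside any container on that host, and the release should match; inside a virtual machine it reports the guest’s own kernel instead. (Docker Desktop on macOS and Windows runs containers inside a Linux VM, so there the host and container outputs differ.) The function name `kernel_identity` is hypothetical.

```python
import platform


def kernel_identity() -> dict:
    """Report which kernel this process is running on."""
    info = platform.uname()
    return {
        "system": info.system,    # e.g. "Linux"
        "release": info.release,  # kernel release; identical for all containers on one host
        "version": info.version,  # kernel build string
    }


if __name__ == "__main__":
    for key, value in kernel_identity().items():
        print(f"{key}: {value}")
```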

The sudden ascendance of Docker unfortunately led some people to think that containers are a new technology and, even worse, that containers are a superior replacement for virtualization. Over time, most people have come to understand that these are different technologies with separate reasons for use. In some cases, a combination of these technologies is useful. We’ve seen uses for a single container inside a virtual machine, multiple containers inside a virtual machine, and even a virtual machine inside a container.

The Xen Project and OpenStack

As we have seen, the Xen Project is the engine behind many cloud companies; without it, some services, such as AWS, wouldn’t have been possible. Rackspace is one of the major companies that use Xen. In 2010, with the help of NASA, Rackspace started a project named OpenStack, whose first release came out in October 2010 and which became one of the most successful cloud platforms in the world. By now, more than 500 companies have joined this project.

The goal of the OpenStack project is to provide an open source cloud computing platform that is easy to implement yet highly scalable, and that can meet the needs of public and private clouds of any size. OpenStack provides a modular cloud infrastructure that runs on standard hardware, along with an enormous set of tools for management and orchestration. OpenStack is a kind of virtualization management platform that sits on top of virtualized resources and helps you automate processes. You can use it to combine tools that work on the virtual resources: for instance, to configure a network, create pooled resources, or put up a user interface.
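OpenStack’s automation is exposed through REST APIs, and a typical session starts by obtaining a token from the Identity service (Keystone). The sketch below only builds, and does not send, a Keystone v3 password-authentication request using the Python standard library; the function name, endpoint URL, user, project, and domain names are hypothetical placeholders. In a real deployment the token comes back in the `X-Subject-Token` response header, and most people would use a client library such as openstacksdk rather than raw HTTP.

```python
import json
import urllib.request


def build_token_request(auth_url: str, user: str, password: str,
                        project: str, domain: str = "Default") -> urllib.request.Request:
    """Build (but do not send) a Keystone v3 password-auth request."""
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {"name": user, "domain": {"name": domain}, "password": password}
                },
            },
            # Scope the token to a project so it can be used with other services.
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }
    return urllib.request.Request(
        url=auth_url.rstrip("/") + "/v3/auth/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint and credentials, for illustration only.
    req = build_token_request("https://keystone.example.com:5000", "demo", "secret", "demo-project")
    print(req.get_method(), req.full_url)
```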

The Xen Project supports OpenStack. Through a toolstack called XAPI, Xen exposes an API called XenAPI to control the hypervisor. OpenStack has a XenAPI driver to control XAPI, so any XenAPI-managed server can be used with OpenStack. XAPI is the preferred mechanism for supporting Citrix Hypervisor (XenServer).
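At the wire level, XenAPI can be spoken over XML-RPC, with method names of the form `class.message`. As a minimal sketch using only the Python standard library, the code below serializes a `session.login_with_password` call and unwraps the `Status`/`Value` structure in which XenAPI returns results. It does not contact a server; the helper names and credentials are hypothetical, and in practice you would use the `XenAPI.py` module shipped with XAPI or an `xmlrpc.client.ServerProxy` pointed at the host.

```python
import xmlrpc.client
from typing import Any


class XenAPIFailure(Exception):
    """Raised when a XenAPI call returns Status == "Failure"."""


def encode_login(username: str, password: str) -> str:
    # Serialize the call; login is a message on the "session" class.
    return xmlrpc.client.dumps((username, password), methodname="session.login_with_password")


def unwrap(result: dict) -> Any:
    # XenAPI XML-RPC responses are structs: {"Status": "Success", "Value": ...}
    # or {"Status": "Failure", "ErrorDescription": [...]}.
    if result.get("Status") == "Success":
        return result["Value"]
    raise XenAPIFailure(result.get("ErrorDescription"))


if __name__ == "__main__":
    payload = encode_login("root", "secret")  # hypothetical credentials
    params, method = xmlrpc.client.loads(payload)
    print(method, params)
    # A successful login returns an opaque session reference as the Value.
    print(unwrap({"Status": "Success", "Value": "OpaqueRef:example"}))
```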

Xen can also be integrated with OpenStack through libvirt, the standard API for managing virtualization platforms. With Xen, the minimum libvirt version you should use is 1.2.9.
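libvirt reports its version as a single integer encoded as major × 1,000,000 + minor × 1,000 + release, so the 1.2.9 minimum corresponds to 1002009. The helpers below are a hypothetical sketch that decode and check such a number using only the standard library. With the libvirt Python bindings installed, the value could come from `libvirt.open("xen:///system").getLibVersion()`; that connection URI assumes the libxl-based Xen driver.

```python
from typing import Tuple

MIN_XEN_LIBVIRT = (1, 2, 9)  # minimum libvirt version recommended for Xen


def decode_libvirt_version(encoded: int) -> Tuple[int, int, int]:
    # libvirt encodes versions as major * 1_000_000 + minor * 1_000 + release.
    major, rest = divmod(encoded, 1_000_000)
    minor, release = divmod(rest, 1_000)
    return major, minor, release


def meets_xen_minimum(encoded: int) -> bool:
    # Tuple comparison orders versions component by component.
    return decode_libvirt_version(encoded) >= MIN_XEN_LIBVIRT


if __name__ == "__main__":
    for v in (1002008, 1002009, 10000000):
        print(decode_libvirt_version(v), meets_xen_minimum(v))
```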

The next article in this series wraps things up by looking at unikernels and other emerging advances in virtualization.