Xen Virtualization and Cloud Computing #02: How Xen Does the Job

This is the second article in a series. The first introduced virtualization and Infrastructure as a Service (IaaS). This article explains how Xen uses different types of virtualization to achieve efficient isolation of virtual machines (VMs).

Hardware and operating system support

Xen runs as a host on a number of Unix-like systems and GNU/Linux distributions. Several Linux distributions ship it by default, including Debian, Ubuntu, openSUSE, and CentOS. On other GNU/Linux distributions, Xen can be compiled from source code. Other Unix flavors on which Xen can be installed as a host are FreeBSD, NetBSD, Solaris, and OpenSolaris-based systems.

Although macOS is a Unix-based operating system, it is proprietary and offers licenses to a limited set of virtualization products. So Xen does not run on macOS.

Operating systems supported as guests on Xen include GNU/Linux, FreeBSD, NetBSD, OpenBSD, MINIX, and Windows OS.

The Xen Project currently supports the x86, AMD64, and ARM architectures, and a port to RISC-V had been started at the time of writing. RISC-V is an open instruction set architecture with the potential to be an excellent platform for embedded systems, and it can support 32-bit, 64-bit, and 128-bit words. A RISC-V port will greatly extend the reach and use cases of Xen.

Virtualization and paravirtualization

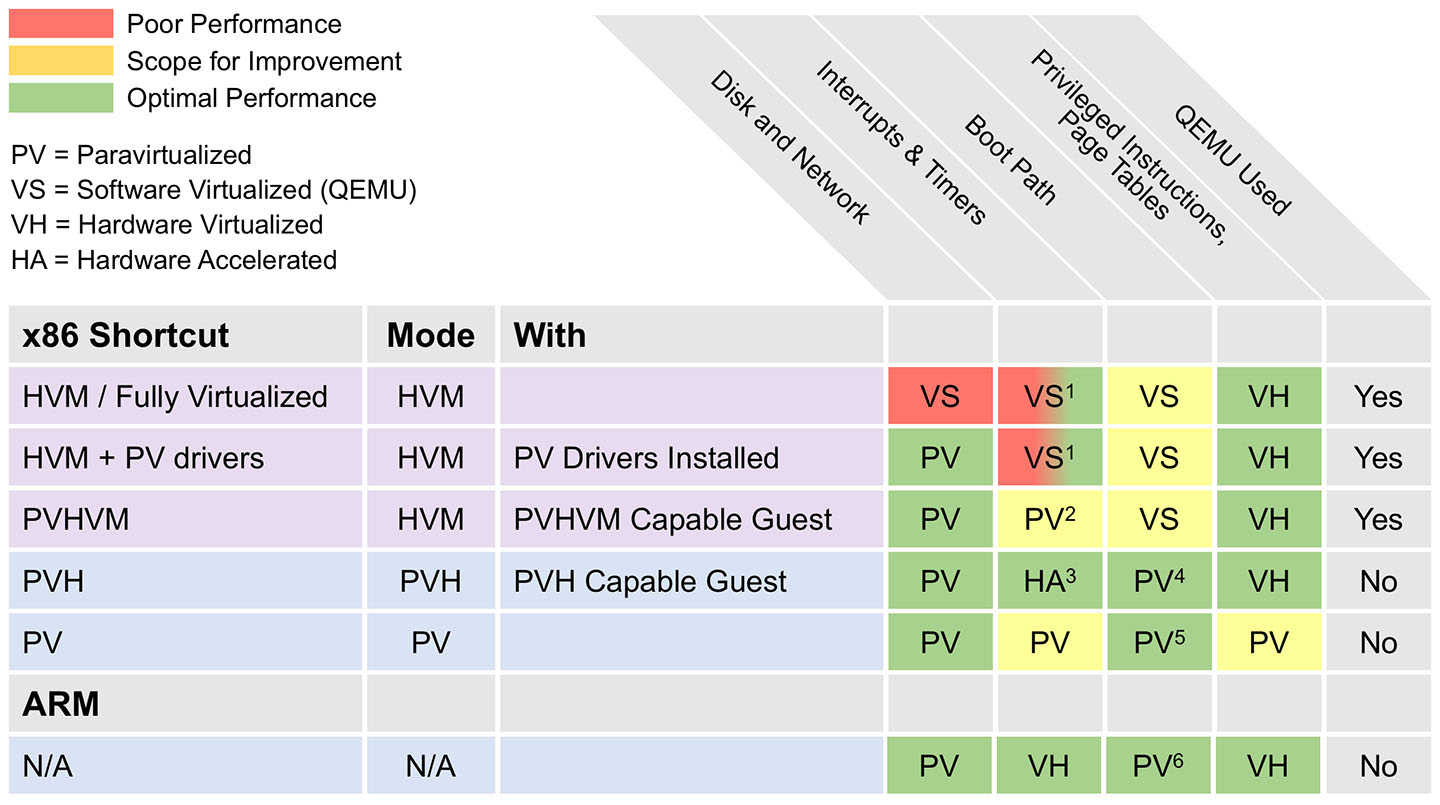

Xen offers five types of virtualization for running the guest operating system:

• PV (Paravirtualization)

• HVM (hardware virtual machine)

• HVM with PV drivers

• PVHVM

• PVH

I’ll briefly describe each type, because we’ll see the implications of their differences later in this article.

Paravirtualization (PV)

Paravirtualization is a concept introduced by the Xen Project. It involves rewriting part of the guest operating system, which is therefore also called a modified guest. The new kernel code replaces nonvirtualizable instructions with hypercalls into an application binary interface (ABI). The normal goal of virtualization is to fool the guest OS kernel into thinking it’s running on the real hardware. In paravirtualization, by contrast, the guest OS knows that it is running in a virtual machine and cooperates with the hypervisor to get access to the actual hardware. In particular, the hypervisor contains a set of paravirtualized (PV) drivers that the guest loads instead of the actual hardware drivers. The guest OS asks the hypervisor to perform functions that would normally require direct access to hardware, such as programming the MMU or accessing certain CPU registers. It is much easier for the hypervisor to translate these calls than to emulate hardware devices and network interfaces for guests.

The result of PV is a very lightweight and fast hypervisor, without the difficulty of providing native OS interfaces. Xen can offer PV even on old CPUs without any support for virtualization.
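To make the idea of a modified guest calling into the hypervisor more concrete, here is a toy model in Python. It is not Xen code: the `ToyHypervisor` and `ParavirtualizedGuest` classes, the hypercall name, and its arguments are all invented for illustration, and a real hypercall is a low-level trap into the hypervisor rather than a method call.

```python
class ToyHypervisor:
    """Toy stand-in for a hypervisor; this is not Xen's real interface."""

    def __init__(self):
        # Per-guest page tables that only the hypervisor may modify.
        self._page_tables = {}
        # Dispatch table: hypercall name -> handler (name is illustrative).
        self._hypercalls = {"mmu_update": self._mmu_update}

    def hypercall(self, guest_id, name, *args):
        """Single entry point a paravirtualized guest uses instead of
        executing a privileged operation itself."""
        handler = self._hypercalls.get(name)
        if handler is None:
            raise ValueError(f"unknown hypercall: {name}")
        return handler(guest_id, *args)

    def _mmu_update(self, guest_id, virtual_page, machine_frame):
        # The hypervisor validates the request, then applies the mapping.
        if machine_frame < 0:
            raise ValueError("invalid machine frame")
        self._page_tables.setdefault(guest_id, {})[virtual_page] = machine_frame
        return 0  # success

    def lookup(self, guest_id, virtual_page):
        return self._page_tables[guest_id][virtual_page]


class ParavirtualizedGuest:
    """A 'modified guest': it knows about the hypervisor and cooperates."""

    def __init__(self, guest_id, hypervisor):
        self.guest_id = guest_id
        self.hypervisor = hypervisor

    def map_page(self, virtual_page, machine_frame):
        # Instead of writing the page table directly, issue a hypercall.
        return self.hypervisor.hypercall(
            self.guest_id, "mmu_update", virtual_page, machine_frame
        )
```

The point of the sketch is the shape of the interaction: the guest never touches the page tables itself, and the hypervisor only has to validate a small, well-defined request rather than emulate hardware.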

Hardware-assisted Virtualization (HVM)

In 2005 and 2006, Intel introduced hardware virtualization support in its CPUs, shortly followed by AMD. At first, this feature was limited and slow. AMD built virtual machine support into its AMD64 CPU family, offering VM instructions under the name AMD-V. Intel provides the same feature under the name VT-x, along with another technology called VT-d that allows devices, such as PCI devices, to be passed through to the guest OS.
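On a Linux machine, one common way to see whether the CPU advertises these extensions is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The following is a minimal sketch; the function names are my own, and it assumes an x86 Linux system. The result reflects only what the running kernel is allowed to see, so it is most reliable on bare-metal Linux.

```python
def hardware_virt_support(cpuinfo_text):
    """Return 'VT-x', 'AMD-V', or None based on /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:   # Intel VT-x
                return "VT-x"
            if "svm" in flags:   # AMD-V
                return "AMD-V"
    return None


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the CPU description exposed by the Linux kernel."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()
```

A typical call would be `hardware_virt_support(read_cpuinfo())`. A result of `None` can also mean that the feature is disabled in the firmware settings.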

Both technologies were invented to solve the performance issues caused by software emulation. Handling these tasks through CPU extensions improved performance, just as hardware support for tasks such as network routing and encryption has sped up other critical operations. However, HVM just simplifies virtualization of the processor, leaving many OS-related features that the hypervisor must still virtualize.

HVM, unlike PV, allows for unmodified guests. So proprietary operating systems such as Microsoft Windows can take advantage of HVM’s fast virtualization. In particular, because Microsoft Windows is a closed-source OS, it originally could not run on a paravirtualized Xen hypervisor, and had to rely on slower emulation. However, because Windows allows loading of third-party drivers, it is now possible to load drivers that can determine whether the instance of Windows is running on Xen. If so, the Windows system uses paravirtual channels to improve the performance of I/O.
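The same kind of detection can be illustrated on a Linux guest, which is easier to show than a Windows driver. The sketch below assumes the Linux sysfs files `/sys/hypervisor/type` and `/sys/hypervisor/guest_type`; the second file exists only on reasonably recent kernels, so treat its presence as an assumption. The function name is hypothetical.

```python
from pathlib import Path


def xen_guest_info(sysfs_root="/sys/hypervisor"):
    """Return (hypervisor_type, guest_type); either may be None if absent.

    On a Linux guest under Xen, the first value is expected to be 'xen'
    and the second a mode label such as 'PV', 'HVM', or 'PVH'.
    """
    root = Path(sysfs_root)

    def read(name):
        try:
            return (root / name).read_text(encoding="utf-8").strip()
        except OSError:
            # File missing or unreadable: not on Xen, or an older kernel.
            return None

    return read("type"), read("guest_type")
```

Passing the sysfs directory as a parameter keeps the function easy to try out against a temporary directory instead of a real Xen guest.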

With HVM, Xen uses QEMU emulation for disk and network I/O. Regular paravirtualization does not use QEMU.

HVM with PV drivers

In a fully virtualized system, the interfaces that the hypervisor must provide for the network and disks are complex. Because all modern kernels can load third-party device drivers, the hypervisor can supply disk and network drivers that use paravirtualized interfaces instead. This technique is also called a fully virtualized system with PV drivers. As you can guess, it is a step toward virtualization with higher performance.

PVHVM

PVHVM (also known as “PV on HVM” or “PV-on-HVM drivers”) is a mixture of paravirtualization and full hardware virtualization. It’s a stepping stone between HVM with PV drivers and PVH. The goal of this technology is to boost the performance of fully virtualized HVM guests by including optimized paravirtual device drivers. These drivers bypass QEMU emulation for disk and network I/O. They also support CPU functionality like Intel EPT or AMD NPT. The result is much faster disk and network I/O performance.

PVH

PVH is a newer kind of guest that was introduced in Xen 4.4 as a DomU and in Xen 4.5 as a Dom0. (DomU and Dom0 are described in a later article in this series.) PVH occupies a place between PV and HVM. The guest OS is aware that it is running on Xen and calls the paravirtualized drivers offered by the hypervisor. But some operations still require the QEMU emulator. Specifically, the OS is still booted using hvmloader and firmware that require emulator support.

PVH can be seen as a PV guest that runs inside of an HVM container, or as a PVHVM guest without any emulated devices. It provides the best performance currently possible and calls for fewer resources on the guest OS than pure PV.

The first version of this innovation, PVHv1, did not simplify the operating system. The second version, PVHv2 (also called HVMLite), is a lightweight HVM guest that uses hardware virtualization support for memory and privileged instructions, PV drivers for I/O, and native operating system interfaces for everything else. PVHv2 does not use QEMU for device emulation. In Xen 4.9, PVHv1 has been replaced with PVHv2, which requires guests running Linux 4.11 or a newer kernel.

Comparison of the Xen technologies

Figure 2, from the Xen Project website, shows how each aspect of virtualization is handled by these different technologies.
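As a rough textual companion to the figure, the properties that this article states for each mode can be written down as a small table in code. This is only a summary of the prose above, not a transcription of Figure 2; the `GuestMode` class and its field names are invented for illustration. "HVM with PV drivers" is omitted because the text does not say how much of QEMU it bypasses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuestMode:
    name: str
    needs_hw_virt: bool   # requires VT-x / AMD-V on the host CPU
    pv_io_drivers: bool   # disk and network go through PV drivers
    qemu_disk_net: bool   # disk and network are emulated by QEMU


# Values taken from the descriptions earlier in this article.
MODES = [
    GuestMode("PV", needs_hw_virt=False, pv_io_drivers=True, qemu_disk_net=False),
    GuestMode("HVM", needs_hw_virt=True, pv_io_drivers=False, qemu_disk_net=True),
    GuestMode("PVHVM", needs_hw_virt=True, pv_io_drivers=True, qemu_disk_net=False),
    GuestMode("PVHv2", needs_hw_virt=True, pv_io_drivers=True, qemu_disk_net=False),
]


def modes_for_cpu(has_hw_virt):
    """List the modes usable on a host with or without hardware support."""
    return [m.name for m in MODES if has_hw_virt or not m.needs_hw_virt]
```

On an old CPU without virtualization extensions, `modes_for_cpu(False)` leaves only PV, which matches the earlier remark that Xen can offer PV without any hardware support.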

Running one VM Inside Another

Some sites want to run one VM inside another, in order to test a variety of hypervisors. Running a hypervisor inside of a virtual machine is called nested virtualization. The main hypervisor that runs on the real hardware is called level 0 or L0; the hypervisor that runs as a guest on L0 is called level 1 or L1; and a guest that runs on the L1 hypervisor is called level 2 or L2. This technology has been supported in Xen since version 3.4.

Components of the Xen hypervisor

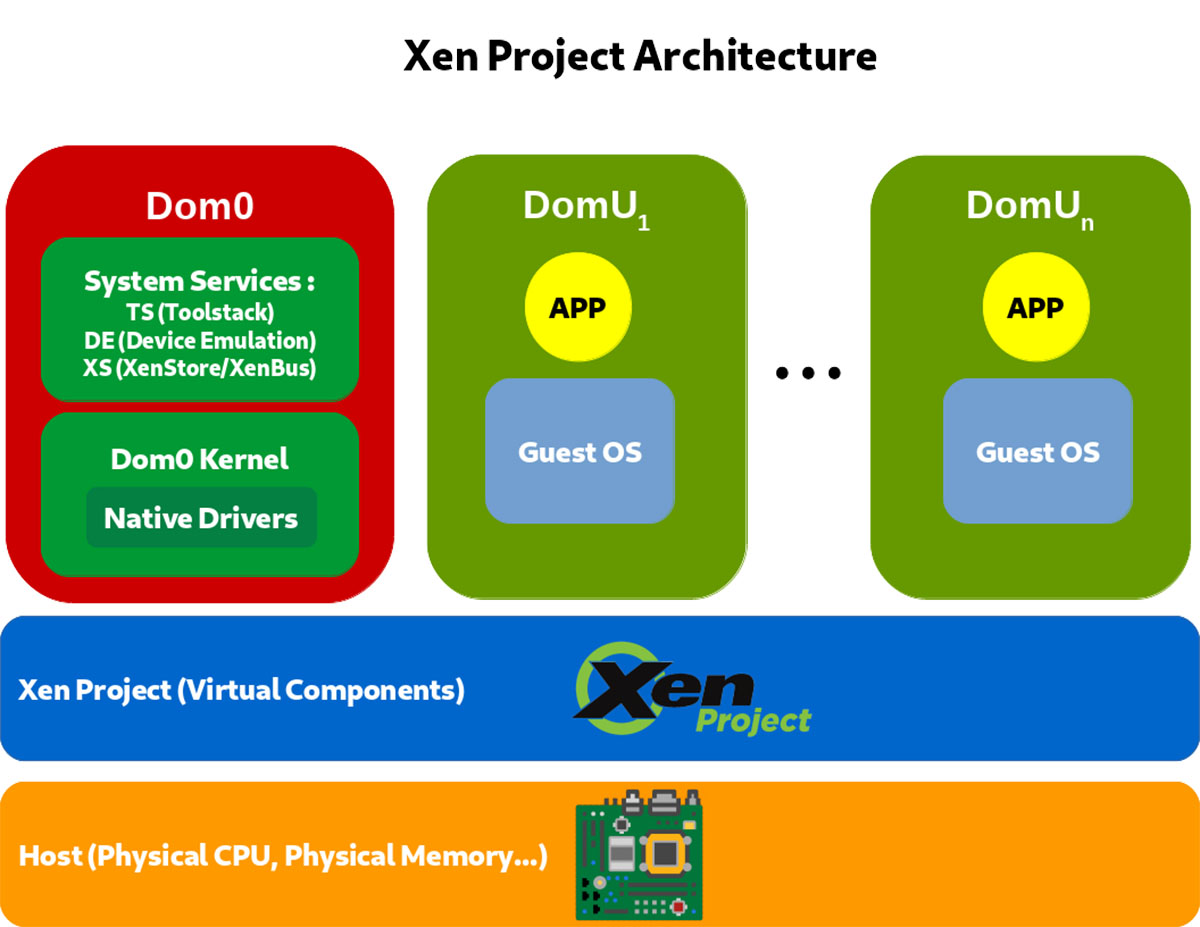

As explained, Xen is a type-1 hypervisor that runs directly on the hardware and handles all its resources for the guests, including CPU, memory, drivers, timers, and interrupts. After the bootloader, Xen is the first program that runs. Xen then launches each guest.

Just as operating systems commonly separate the root user or superuser from other users, and give the root user special powers and privileges, Xen distinguishes between the host and the guests by defining domains; each domain has access only to the resources and activities allowed to that guest. Each guest runs in a DomU domain, where the U stands for unprivileged. In contrast, a single host operating system runs in a domain called Dom0.

Dom0 is a privileged domain with direct access to the hardware. Dom0 handles all access to the hardware and I/O and manages them on behalf of the users’ VMs. This domain also contains the drivers for all the hardware devices on the system, and tools to manage the Xen hypervisor.

The separation between Dom0 and DomU allows VMs to run and use all system services without privileged access to the hardware or I/O functionality—even though they think they have that access.

Figure 3 shows the Xen hypervisor architecture.

The system services shown in the Dom0 box of Figure 3 include:

- A Toolstack for the Xen administrator. The Toolstack provides a command-line console or a graphical interface for creating, configuring, monitoring, managing, and removing the virtual machines.

- Device emulators, which are exposed to the guest OS in each VM. Thus, each guest issues calls and control instructions to the emulator, thinking it is interacting directly with a device.

- XenBus, a software abstraction that allows VMs to share configuration information. VMs do this through access to a shared database of configuration information called XenStore.
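The idea of a shared configuration database with different privileges for Dom0 and DomU can be sketched as a toy in-memory store. This is not the XenStore implementation or its API: the `ToyXenStore` class and its permission rule are deliberate simplifications, and only the `/local/domain/<domid>/` path layout is modeled on the real store.

```python
class ToyXenStore:
    """Toy hierarchical key-value store keyed by slash-separated paths."""

    def __init__(self):
        self._data = {}

    @staticmethod
    def _may_write(domid, path):
        # Toy rule: domain 0 may write anywhere; an unprivileged domain
        # may write only inside its own subtree.
        return domid == 0 or path.startswith(f"/local/domain/{domid}/")

    def write(self, domid, path, value):
        if not self._may_write(domid, path):
            raise PermissionError(f"domain {domid} may not write {path}")
        self._data[path] = value

    def read(self, path):
        """Return the stored value, or None if the key does not exist."""
        return self._data.get(path)

    def ls(self, prefix):
        """Return all stored paths below the given prefix, sorted."""
        prefix = prefix.rstrip("/") + "/"
        return sorted(p for p in self._data if p.startswith(prefix))
```

In this model, Dom0 (domain ID 0) can set up a guest's entries, while the guest itself can publish values only under its own path.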

The standard setup for Dom0 contains the following functions:

- Native Device Drivers

- System Services

- Virtual Device Drivers (backends)

- Toolstack

The Toolstack can be a command-line console or a graphical interface. It allows a user to create, configure, manage, and remove the virtual machines.

Although Dom0 is normally the hub of Xen virtualization, controlling all other components, we’ll see later in this series that a Dom0-less architecture is possible for specific purposes.

The Xen hypervisor supports three technologies for I/O virtualization: the PV split driver model, device emulation-based I/O, and passthrough. Explaining these technologies lies beyond the scope of this article. I’ll just say a bit about passthrough, which gives the VM access to the physical devices. For example, you can use PCI passthrough to give a VM direct access to a NIC, disk controller, HBA, USB controller, sound card, or other PCI device. Passthrough may cause some security problems, and comes with limitations. For example, ancillary operations like save, restore, and migration are not possible.
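With the xl toolstack, PCI passthrough is typically requested in the guest configuration file through a `pci` list of device addresses in bus:device.function (BDF) form. The helper below is a small sketch that validates such addresses and renders the configuration line; the function names are my own, and the sketch does not cover preparing the device on the host so that it can be assigned to a guest.

```python
import re

# Optional 4-digit PCI domain, then bus:device.function in hexadecimal.
_BDF = re.compile(
    r"^(?:(?P<domain>[0-9a-fA-F]{4}):)?"
    r"(?P<bus>[0-9a-fA-F]{2}):(?P<dev>[0-9a-fA-F]{2})\.(?P<func>[0-7])$"
)


def normalize_bdf(bdf):
    """Return a PCI address as 'dddd:bb:dd.f', adding domain 0000 if absent."""
    match = _BDF.match(bdf.strip())
    if match is None or int(match.group("dev"), 16) > 0x1F:
        raise ValueError(f"not a PCI BDF address: {bdf!r}")
    domain = match.group("domain") or "0000"
    return "{}:{}:{}.{}".format(
        domain, match.group("bus"), match.group("dev"), match.group("func")
    ).lower()


def xl_pci_line(bdfs):
    """Render a 'pci = [ ... ]' line for an xl guest configuration file."""
    quoted = ", ".join("'{}'".format(normalize_bdf(b)) for b in bdfs)
    return "pci = [ {} ]".format(quoted)
```

For example, `xl_pci_line(["01:00.0"])` produces a line that names the device at bus 01, device 00, function 0.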

The next article in this series covers the key features in Xen and their contribution to virtualization.
